Ponyu

Description

A trainable, self-governing plant vase that moves around the home and reshapes its landscape based on everyday human habits.

Advisor

Challenge

To rethink an object or interface in the home so that it embraces training as the primary interaction paradigm. What can that interface implicitly learn from you or from its context? What can you explicitly teach it?

Outcome

A trainable, self-governing plant vase that learns from everyday human habits and its context. Over time, a flock of these plants can reveal people's habits and patterns by shaping the landscape of the home.

Context

As a team, we were asked to identify interfaces in our homes that we would love to make trainable. Living in Denmark, we noticed that people really love keeping plants at home. Interestingly, a plant's position can also determine how regularly it gets watered.

Plants in the kitchen or near a work desk are watered regularly, because people usually remember to water them when they see them. This may not be true for everyone, but it made us curious about how plants would behave if they had to adapt to human behaviour.

How Ponyu works

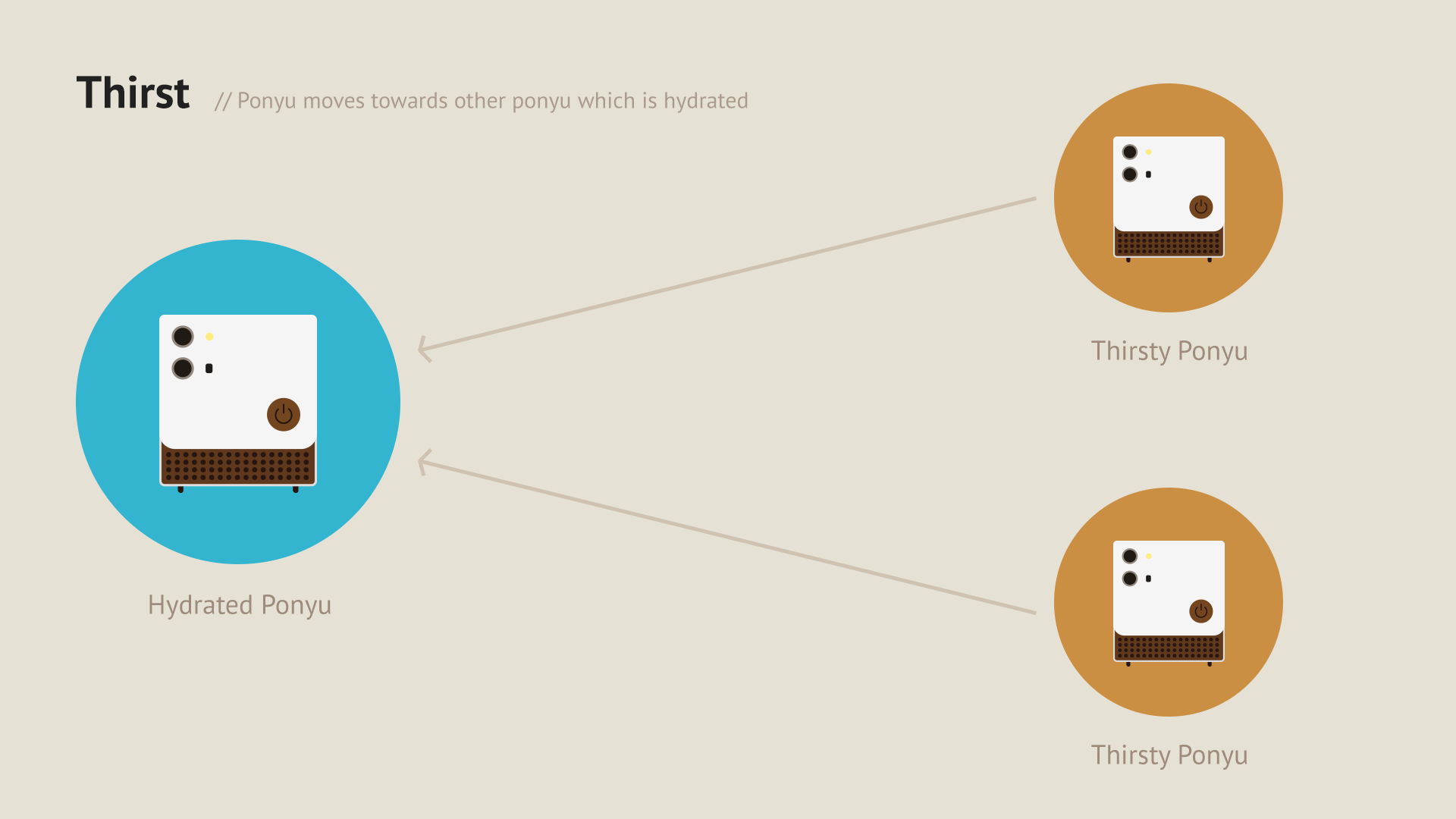

Primary Drive: Thirst

Ponyu's primary drive is thirst. Each plant has its own soil-moisture threshold. Once the moisture drops below that threshold, Ponyu starts moving in search of a plant that is already hydrated.
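The per-plant threshold idea can be sketched as a small check. This is a hypothetical illustration, not Ponyu's firmware: the `Plant` class, the species names and the threshold values are all placeholders, and it assumes the moisture sensor reading has been normalised to a 0-1 scale.

```python
from dataclasses import dataclass

# Hypothetical per-plant thresholds on a normalised 0-1 soil-moisture scale.
# These are placeholder values for illustration, not figures from the project.
MOISTURE_THRESHOLDS = {
    "succulent": 0.10,
    "fern": 0.40,
}


@dataclass
class Plant:
    species: str
    moisture: float  # normalised soil-moisture reading in [0, 1]

    def is_thirsty(self) -> bool:
        """Return True once moisture has dropped below this species' threshold."""
        return self.moisture < MOISTURE_THRESHOLDS[self.species]


# The same reading means different things for different plants.
print(Plant("fern", 0.35).is_thirsty())       # True: below 0.40
print(Plant("succulent", 0.35).is_thirsty())  # False: above 0.10
```

Keeping the threshold per species, rather than global, is what lets the same sensor reading trigger movement for a fern but not for a succulent.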

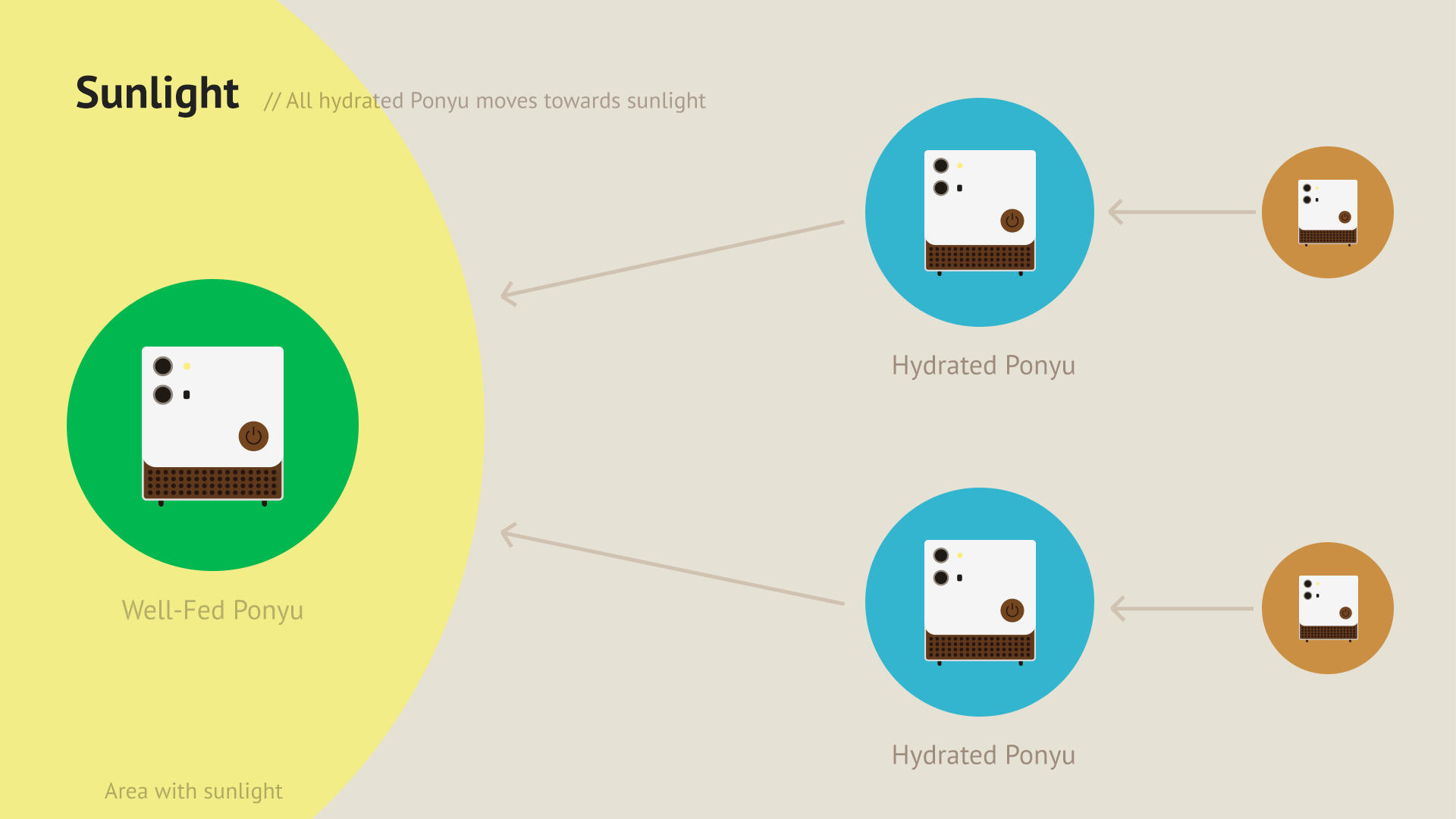

Secondary Drive: Sunlight

Ponyu's secondary drive is sunlight. Once a plant is hydrated, it moves around the house to find a sunny spot.
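The two drives form a simple priority order: thirst is always checked first, and sunlight only matters once the plant is hydrated. A minimal sketch of that arbitration follows, assuming normalised moisture and light readings. The function name, the `sunny_level` parameter and the `REST` behaviour (what happens once both drives are satisfied) are hypothetical and not described in the original.

```python
from enum import Enum


class Behaviour(Enum):
    SEEK_HYDRATED_PLANT = "seek a hydrated plant"
    SEEK_SUNLIGHT = "seek sunlight"
    REST = "rest"  # assumption: the source does not describe a third behaviour


def choose_behaviour(moisture: float, moisture_threshold: float,
                     light: float, sunny_level: float) -> Behaviour:
    """Pick one behaviour per control-loop pass; thirst always wins."""
    if moisture < moisture_threshold:
        # Primary drive: thirst.
        return Behaviour.SEEK_HYDRATED_PLANT
    if light < sunny_level:
        # Secondary drive: sunlight, only considered once hydrated.
        return Behaviour.SEEK_SUNLIGHT
    # Both drives satisfied.
    return Behaviour.REST


print(choose_behaviour(0.2, 0.4, light=0.9, sunny_level=0.6).value)  # seek a hydrated plant
print(choose_behaviour(0.5, 0.4, light=0.3, sunny_level=0.6).value)  # seek sunlight
```

Note that a thirsty Ponyu sitting in full sun still leaves to look for water, because the first check returns before light is ever read.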

Dissecting Ponyu

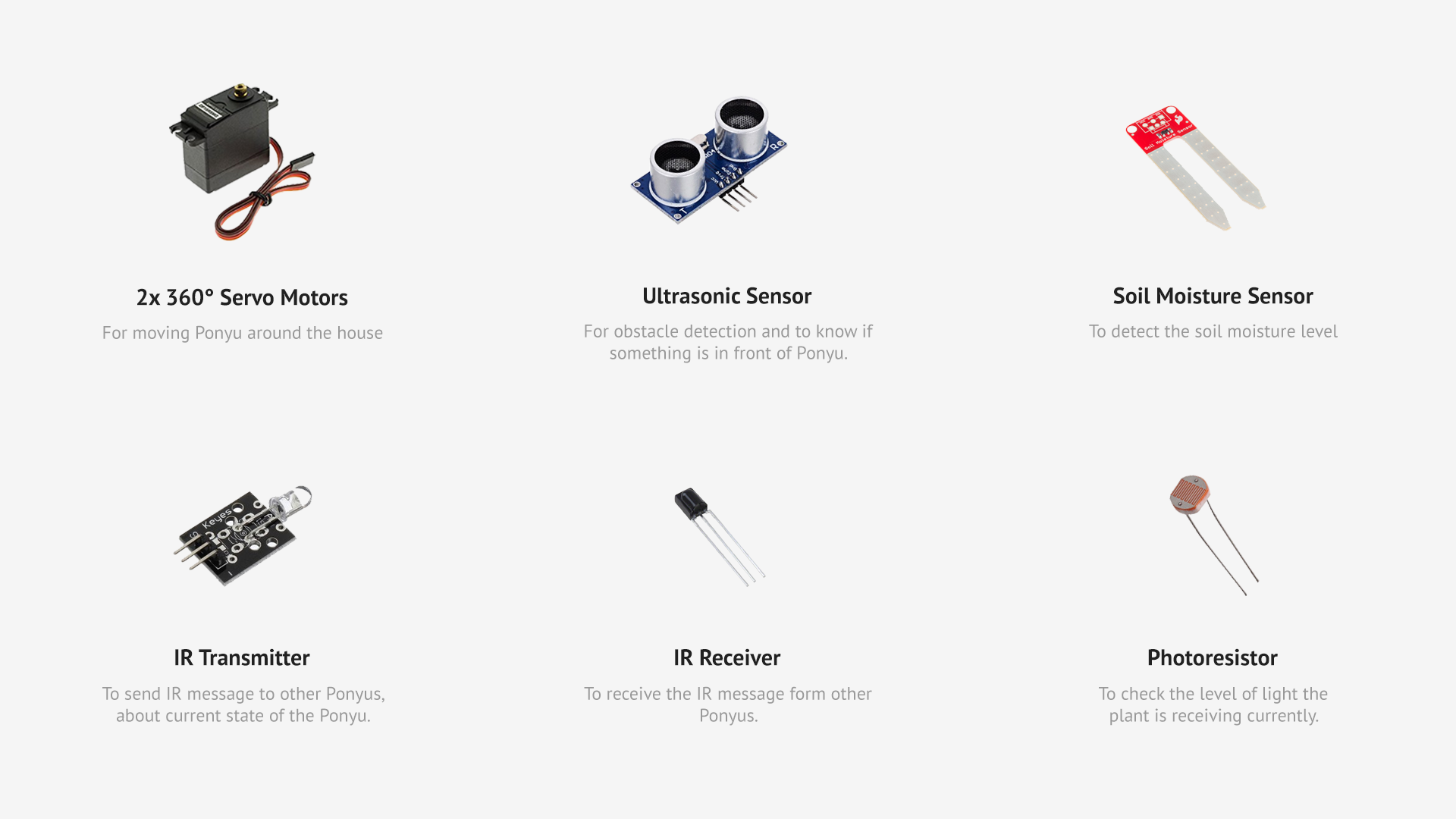

Sensors and Actuators

Ponyu uses quite a few sensors so that it can be as autonomous as possible. We made a conscious decision not to use the internet for communication between Ponyus and to keep the system as offline as possible.

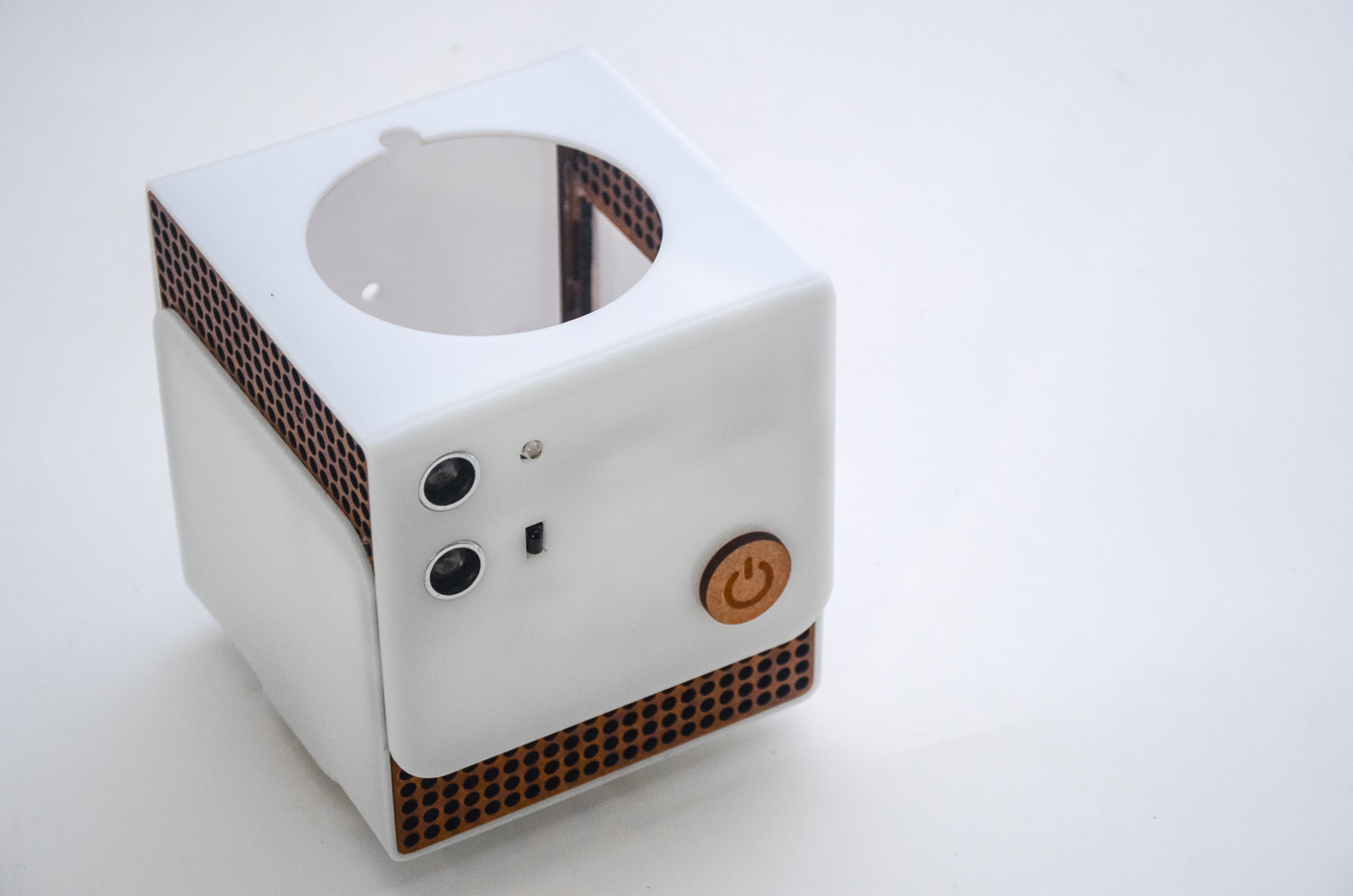

Outer Body

Process - Prototyping & Iterations

For this project, we closely followed CIID's mantra: Build, Test, Repeat.

We took a systematic approach to making the product, building a simple version first and then adding complexity in layers.

The first version

In the first version, we tested only a simple frame and two servos. The GIF below shows us testing a random walk function.
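A random walk on a two-servo frame could look something like the sketch below. It is a hypothetical reconstruction, not the team's code: it assumes two continuous-rotation servos driving the frame like a differential-drive robot, with speeds normalised to [-1, 1]. The 70/30 split between driving and turning and the duration ranges are arbitrary placeholders.

```python
import random
from typing import Iterator, Tuple

Command = Tuple[float, float, float]  # (left speed, right speed, seconds)


def random_walk(rng: random.Random, steps: int) -> Iterator[Command]:
    """Yield wheel commands for a simple random walk.

    Speeds are normalised to [-1, 1] for two hypothetical continuous-rotation
    servos; a real robot would map them to servo pulse widths.
    """
    for _ in range(steps):
        if rng.random() < 0.7:
            # Drive straight ahead for a random duration.
            yield (1.0, 1.0, rng.uniform(0.5, 2.0))
        else:
            # Spin in place, left or right, to pick a new heading.
            direction = rng.choice((-1.0, 1.0))
            yield (direction, -direction, rng.uniform(0.2, 1.0))


for left, right, seconds in random_walk(random.Random(42), 5):
    print(f"left={left:+.1f} right={right:+.1f} for {seconds:.2f}s")
```

Passing in a seeded `random.Random` makes a test run repeatable, which helps when comparing frame or servo changes between iterations.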

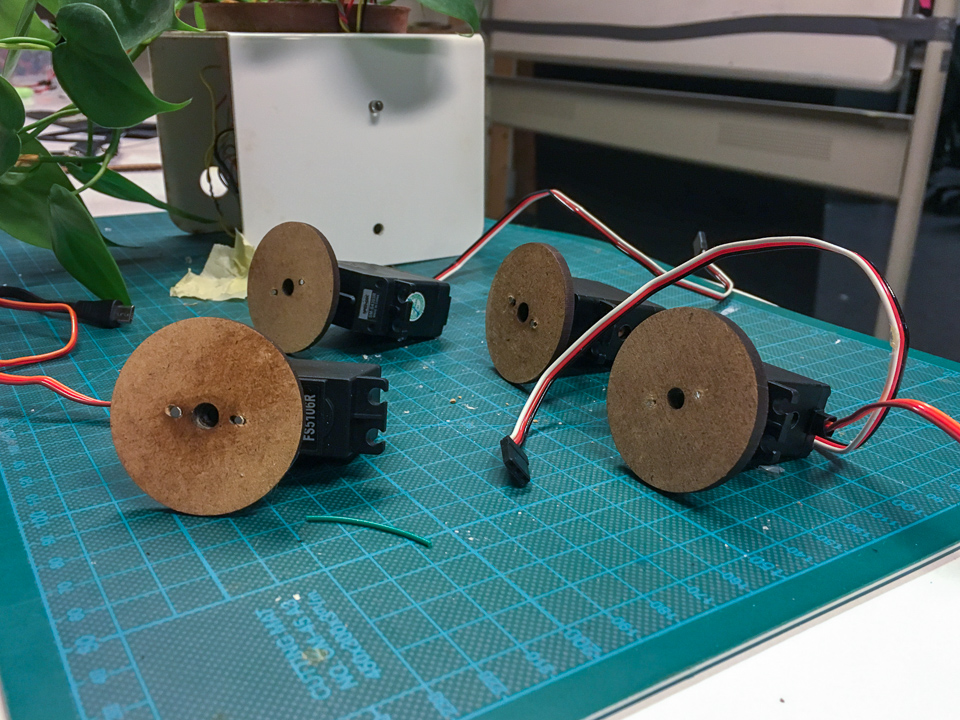

Working on the final version

We then added the remaining sensors and assembled everything into a new body. The image below shows parts of our process.

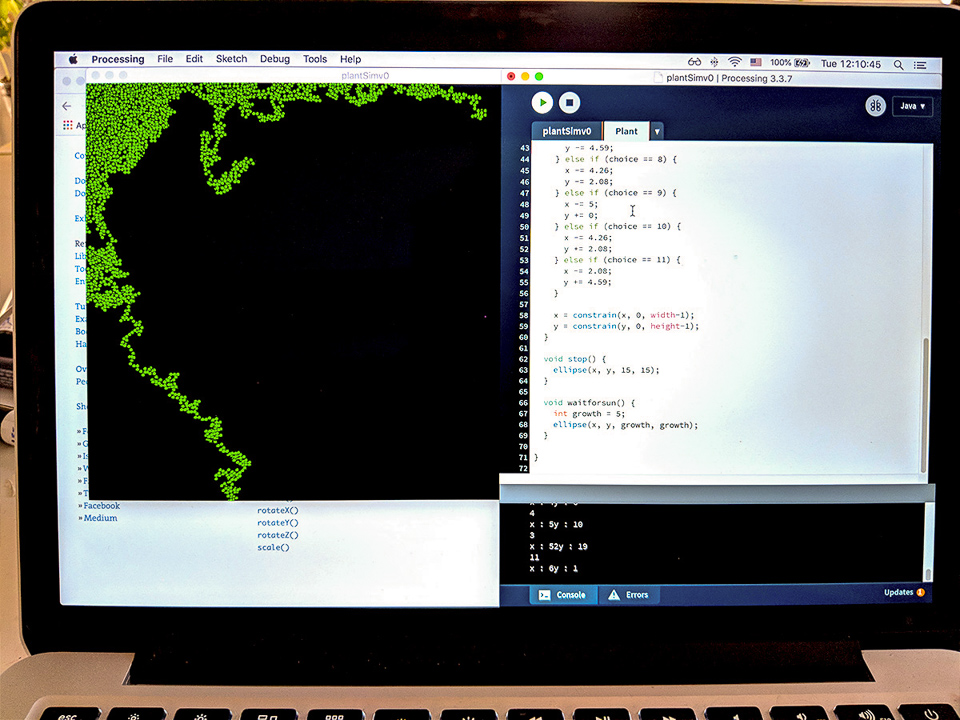

Simulation of flock behaviour

Building multiple Ponyus to demonstrate flocking behaviour wasn't feasible, so we made a simulation in Processing to show how a flock of Ponyus would behave over time.

White Dot - A hungry Ponyu

Blue Dot - A hydrated Ponyu

Green Dot - A well-fed Ponyu

Yellow Blob - Sun
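The team's simulation was written in Processing, and its code is not shown here. As a rough stand-in, the Python sketch below reproduces the same three states and the sun, so the drives described earlier can be seen interacting. Everything in it is an assumption: the constants, the rule that a Ponyu counts as well-fed when it is hydrated and within `SUN_RADIUS` of the sun, and especially `WATERING_SPOT`, a hypothetical place where a person habitually waters any Ponyu nearby, standing in for human habits.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]

# Hypothetical tuning constants, chosen only to make the sketch run.
THRESHOLD = 0.4                      # below this a Ponyu is "hungry" (thirsty)
DRY_RATE = 0.01                      # moisture lost per tick
SPEED = 1.0                          # distance moved per tick
SUN: Point = (80.0, 80.0)            # the "yellow blob"
SUN_RADIUS = 10.0
WATERING_SPOT: Point = (20.0, 20.0)  # assumed spot where a person habitually waters
WATERING_RADIUS = 15.0


def dist(a: Point, b: Point) -> float:
    return math.hypot(a[0] - b[0], a[1] - b[1])


@dataclass
class Ponyu:
    x: float
    y: float
    moisture: float  # normalised 0-1

    @property
    def pos(self) -> Point:
        return (self.x, self.y)

    def state(self) -> str:
        if self.moisture < THRESHOLD:
            return "hungry"    # white dot
        if dist(self.pos, SUN) <= SUN_RADIUS:
            return "well-fed"  # green dot
        return "hydrated"      # blue dot

    def step_towards(self, target: Point) -> None:
        d = dist(self.pos, target)
        if d > 1e-9:
            s = min(SPEED, d)  # never overshoot the target
            self.x += (target[0] - self.x) / d * s
            self.y += (target[1] - self.y) / d * s


def tick(flock: List[Ponyu], water_now: bool) -> None:
    # Snapshot of who counts as hydrated at the start of this tick.
    hydrated = [p for p in flock if p.moisture >= THRESHOLD]
    for p in flock:
        p.moisture = max(0.0, p.moisture - DRY_RATE)
        if water_now and dist(p.pos, WATERING_SPOT) <= WATERING_RADIUS:
            p.moisture = 1.0
        state = p.state()
        if state == "hungry":
            # Primary drive: head for the nearest plant that is hydrated.
            others = [q for q in hydrated if q is not p]
            if others:
                nearest = min(others, key=lambda q: dist(p.pos, q.pos))
                p.step_towards(nearest.pos)
        elif state == "hydrated":
            # Secondary drive: move towards the sun.
            p.step_towards(SUN)
        # "well-fed" Ponyus stay where they are.


def simulate(n: int = 20, ticks: int = 500, water_every: int = 50,
             seed: int = 1) -> List[Ponyu]:
    rng = random.Random(seed)
    flock = [Ponyu(rng.uniform(0, 100), rng.uniform(0, 100), rng.uniform(0, 1))
             for _ in range(n)]
    for t in range(ticks):
        tick(flock, water_now=(t % water_every == 0))
    return flock


counts = {}
for p in simulate():
    counts[p.state()] = counts.get(p.state(), 0) + 1
print(counts)
```

Moving `WATERING_SPOT` or changing `water_every` is the sketch's crude equivalent of a household changing its habits, and it shifts where the flock ends up.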
